Dr Peter Burt of Drone Wars UK says that, in spite of contrary assurances, the UK is developing the components of autonomous weaponry.

Article from Responsible Science journal, no. 1; published online 12 June 2019

In the debate about the use of armed drones we frequently find governments arguing that drones are weapons which conduct precision strikes, reducing civilian casualties. Some commentators go further, suggesting that drones are increasingly allowing ‘risk free warfare’ to be waged, with drone crews operating their aircraft from bases far from the battlefield and facing minimal risks of death or injury.

Such narratives play a part in the growing push towards the automation of military technology, and are used to justify the trend for drones to become increasingly autonomous, that is, able to operate with reduced, or even no, human input. Drone technology provides a platform for the development of lethal autonomous weapons – sometimes labelled ‘killer robots’ – able to select and engage targets without human intervention. Drones that kill are an authoritarian technology which will allow the development of new roles in warfare, drawing on their surveillance and loitering capacities and their ability to work together in swarms. Nations which uphold humane values and support democracy and human rights should be opposing the development of such technology.

The UK government says that it has “no intention to develop” autonomous weapon systems. But despite this, the Ministry of Defence (MOD) is actively supporting research into new technology which would allow weaponised drones to undertake autonomous missions.

Developments in drone technology are being enabled by advances in the fields of computing (notably machine learning and artificial intelligence – AI), robotics, and sensors able to detect objects and changes in the surrounding environment. Currently this technology is focused on simple tasks – often described as ‘dull, dirty, or dangerous’ – such as logistics and supply, or conducting search patterns. However, as the technology evolves it is gradually becoming capable of undertaking more complex operations.

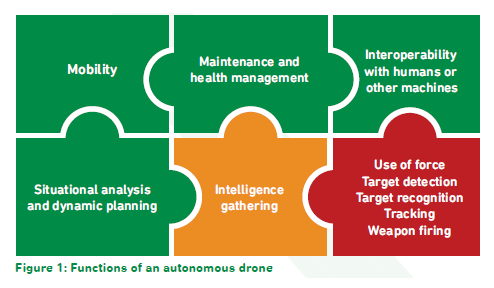

Drones are currently evolving in a ‘modular way’, and lethal autonomous weapons are likely to emerge as new combinations of existing technology rather than entirely new systems.

Drone technology provides a platform for the development of lethal autonomous weapons as advances in different areas of technology gradually allow drones to evolve to become more autonomous.

Figure 1 shows some of the functions of an autonomous drone. Advances are being made in each of these fields of development. In many of these areas, such advances raise no particular concern (green blocks). In others, known as ‘critical functions’ (red blocks) because they are associated with the use of force, they are a cause for concern. Some functions, such as the gathering and on-board processing of intelligence, are a ‘halfway house’ between the two, depending on the use to which the information is put.

How much of a risk is this? Although we usually think of autonomous weapons as belonging to the realm of science fiction, the prospect of their deployment is now on the horizon. An improvised small armed autonomous drone is “something that a competent group could produce”, according to Stuart Russell, Professor of Computer Science at the University of California, Berkeley, and a leading voice against the development of autonomous weapons. A working system “could then be fielded in large numbers in eighteen months to two years. It’s really not a basic research problem”.

However, such a weapon would pose a grave challenge to the laws of war (see John Finney’s article on robotics ethics). As well as legal problems, there are also significant technical risks posed by the unpredictable behaviour of such systems, potential loss of control through hacking or spoofing, the danger of ‘normal accidents’ arising in complex systems which are not fully understood, and the potential of misuse for purposes that weapons have not been designed or authorised for.

The UK’s position

Government policy on autonomous weapons was set out in 2017 in a Joint Doctrine Publication from the Ministry of Defence on Unmanned Aircraft Systems. The document gave the following definition for autonomous systems:

“An autonomous system is capable of understanding higher-level intent and direction. From this understanding and its perception of its environment, such a system is able to take an appropriate action to bring about a desired state. It is capable of deciding a course of action, from a number of alternatives, without depending on human oversight and control, although these may still be present. Although the overall activity of an autonomous unmanned aircraft will be predictable, individual actions may not be.”

The document went on to say: “The UK does not possess fully autonomous weapons and has no intention of developing them. Such systems are not yet in existence and are not likely to be for many years, if at all.”

This sounds reassuring, and has presumably been designed to be so. However, the MOD definition is based very much on the long-term potential of autonomous weapons, rather than the state of technology as it is today. The Ministry of Defence has been accused of ‘defining away’ the problems associated with autonomous weapons by setting such a high threshold of technical capability for what counts as one. The House of Lords Select Committee on Artificial Intelligence points out that definitions adopted by other NATO states focus on the level of human involvement in supervision and target setting, and do not require “understanding higher level intent and direction”, which could be taken to mean at least some level of sentience. The Select Committee described the UK’s definition of autonomous weapons as “clearly out of step with the definitions used by most other governments”, which limits the government’s “ability to take an active role as a moral and ethical leader on the global stage in this area”.

This definition also allows the MOD to pretend that it is not undertaking research into autonomous weapon systems. Despite the reassurance in the Joint Doctrine Publication, research conducted by Drone Wars UK shows that the MOD is actively supporting the development of autonomous drones.

UK research into military autonomous systems and drones

The government’s Industrial Strategy, published in November 2017, describes artificial intelligence, data, and robotics as priority areas for future investment. Consequently, artificial intelligence and robotics are also funding priorities for the Engineering and Physical Sciences Research Council (EPSRC). The position is similar inside the Ministry of Defence. The October 2017 Defence Science and Technology Strategy described autonomous technology and data science as “key enablers” presenting “potential game-changing opportunities”. Research into autonomous systems, sensors, and artificial intelligence is underway through the Defence Science and Technology Laboratory (DSTL) and the Defence and Security Accelerator (DASA) programme.

One example of such a research programme was the ‘Autonomous Systems Underpinning Research’ (ASUR) programme, a joint industry / academia programme funded by DSTL and managed by BAE Systems. The aim of the programme was to develop a science and technology base to allow the production of intelligent unmanned systems for the UK’s armed forces. Among the programme’s outputs were a system to ‘hand over’ targets from high-level to lower-level drone systems; a computer system to plan and manage drone swarm missions; and a drone capable of landing in confined spaces by a perched landing, similar to the way birds land.

Paradoxically, civil sector research into artificial intelligence and robotics has a greater influence on the development of military technology than research funded directly by the military itself. This is because the main innovators in autonomous technology and artificial intelligence – the consumer electronics sector, internet companies, and car manufacturers – are in the civil sector. Research budgets and staff salaries in these companies dwarf those available in the military.

However, the military is keen to get a slice of the cake. According to General Sir Chris Deverell, Commander of Joint Forces Command and responsible for the UK’s military intelligence and information, “The days of the military leading scientific and technological research and development have gone. The private sector is innovating at a blistering pace and it is important that we can look at developing trends and determine how they can be applied to defence and security.”

An example of the military use of civil sector information technology is Project Maven, a Pentagon project that uses artificial intelligence to process video feeds from drones, with image recognition software developed by Google among its algorithms. Encouragingly, employee pressure forced Google to withdraw from Project Maven, showing that scientists and engineers can successfully influence the development of authoritarian technologies. Google’s withdrawal from Project Maven was far from a token victory, as the pressure from employees acted as a ‘line in the sand’ for the company. Partly as a result of ethical concerns, Google subsequently withdrew as a bidder for the US Department of Defense Joint Enterprise Defense Infrastructure (JEDI) programme, a billion-dollar contract to develop a cloud computing system for the US military. Google is a powerful innovator with considerable talent among its employees, and the Department of Defense is not happy about losing access to this expertise. Robert Work, the former Deputy Secretary of Defense responsible for Project Maven, stated on the record that he was ‘alarmed’ at the prospect of Google employees making moral demands of this nature.

Not surprisingly, military contractors have been heavily involved in the development of autonomous drones. BAE Systems has developed a whole string of autonomous demonstrator aircraft, including the Taranis experimental stealth drone, which is reportedly able to identify and attack targets autonomously. QinetiQ and Thales Group are other key players working on autonomous systems for the MOD, and Lockheed Martin, Boeing, Airbus Defence and Space, and MBDA are also involved to a lesser extent.

Within academia, partnership work takes place in collaboration with military contractors, who draw on the specialist research facilities and expertise available in universities. BAE Systems and Thales, among others, have formal strategic partnership arrangements with certain universities. The EPSRC promotes co-operation between universities and the MOD in relation to AI, for example through the Alan Turing Institute, the national institute for data science. With funding from the EPSRC, five universities (Cambridge, Edinburgh, Oxford, University College London, and Warwick) collaborated to form the Institute. One of the Institute’s core areas of research is defence and security, and it has entered into a strategic partnership with GCHQ and with the Ministry of Defence, through DSTL and Joint Forces Command.

Using the Freedom of Information Act, Drone Wars UK undertook a brief survey of collaboration between the Ministry of Defence and military contractors with a sample of university departments. Some examples of collaboration are shown in Table 1.

Table 1: University research on autonomy and drones funded by the MOD and / or military contractors

| University | Area of collaboration |
|---|---|
| Cranfield University | Autonomous systems |
| Imperial College London | Sensors and data analytics |
| Loughborough University | Autonomous systems |
| University College London | Imaging and sensors |
| University of Cambridge | Control and performance |
| University of Liverpool | Ship-launched drones |

The UK and autonomous weapons: the current state of play

The evidence indicates that far from having “no intention of developing” autonomous weapons, the Ministry of Defence is actively funding and engaged in research and development of technology which would allow weaponised drones to undertake autonomous missions.

The UK government, together with the governments of France, Israel, Russia, and the USA, has also explicitly opposed a proposed international ban on the development and use of autonomous weapons. The Foreign and Commonwealth Office has stated that, “At present, we do not see the need for a prohibition on the use of lethal autonomous weapon systems, as international humanitarian law already provides sufficient regulation for this area”.

Drone Wars UK believes that the government should not be blocking steps to outlaw authoritarian technology of this nature. The UK should support the introduction of a legal instrument to prevent the development, acquisition, deployment, and use of fully autonomous weapons. In order to allow transparency over its own research in this field, the government should publish an annual report identifying research it has funded in the area of military autonomous technology and artificial intelligence. We would like to see MPs and Peers doing more to investigate the impact of emerging military technologies, including autonomy and artificial intelligence, and pressing the government to adopt an ethical framework to control their development and use.

As well as having potential military applications, artificial intelligence also has massive potential to transform the world for the better. The government should therefore fund a wide-ranging study, perhaps under the auspices of the Alan Turing Institute, into the use of artificial intelligence to support conflict resolution and promote sustainable security. Alongside this, the government should initiate a broad and much-needed public debate on the ethics and future use of artificial intelligence and autonomous technologies, particularly their military applications.

This article is based on a research study by Drone Wars UK, ‘Off The Leash’, which was funded by the Open Society Foundations. The report, including full references, is available online at www.dronewars.net